(Almost) Vibe Coding a RAG

Why I didn't build a RAG and why you shouldn't too

Like the ADHD procrastinator I am, the temptation of a side project to optimize my main project got the best of me. I was having to read through a lot of PDFs and was running out of patience and free AI credits. So I took it upon me to build and host a personal RAG. I embraced the vibe coding lifestyle and started a new project in cursor when it suddenly occurred to me: I’ll have to look at react code for the frontend. React being my Kryptonite, I quickly started looking into alternate solutions, here’s what I found:

The Backend

The number of options for an LLM engine fascinated me but the two that stuck out to me were Ollama and LocalAI. I could say I decided with Ollama because of better documentation and integration but actually it was the cool logo.

The Interface

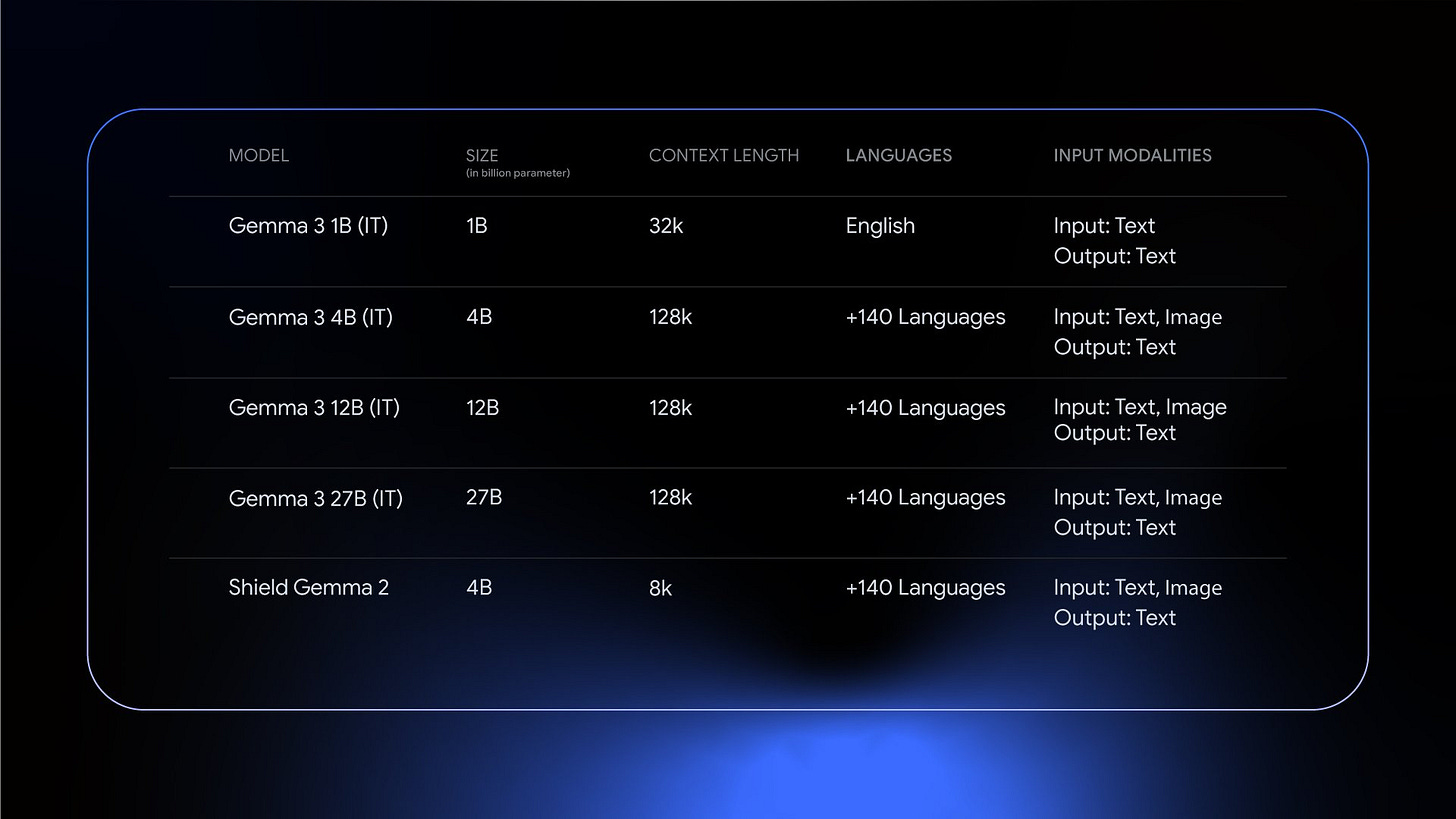

After experimenting with LMStudio and OpenWebUI I decided with OpenWebUI. I really liked the interface of LMStudio, but found it lacking in features like function calling and tools. The web-based architecture also made a convincing case for using OpenWebUI as it’d allow me to deploy on a container and access the UI from any of my devices.

The Model

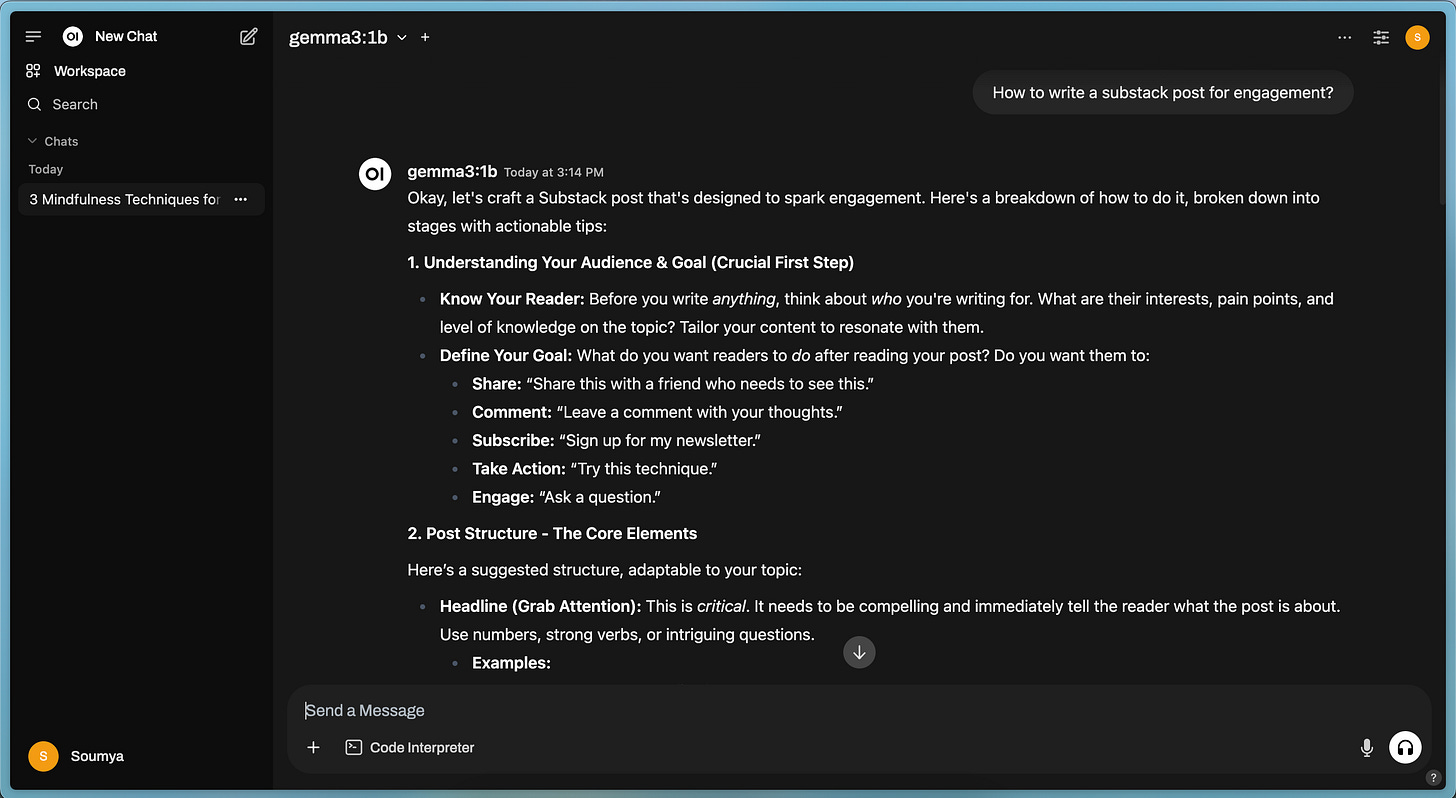

I started my tests with llama3.2 but found it’s lack of multimodality very restrictive. DeepSeek R1 made waves as the best open source model, but in my tests I found it slow to inference on my M2 Macbook Pro for the 7b model, realising that it choked my hardware, I immediately switched to the 1.5b model and saw improvements but the reasoning was an overkill for my task and it generated the final response slower than if it wasn’t. I was considering using Quen when I saw the new Gemma 3 family of models. They seemed really interesting because of both the multimodality and the longer context size I desired for my longer PDFs. I finally decided to install both Gemma3 4b and 1b models alternating between the two based on whether I needed multimodality. While the 1b model is severely limited in terms of languages supported, context window and multimodality, it makes up for it in speed. Here’s a comparision between the different models:

The Parameters

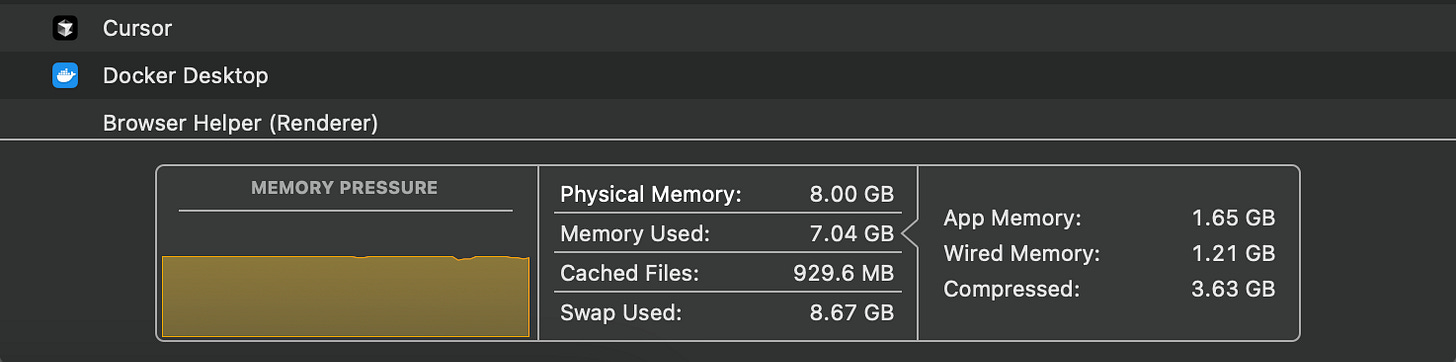

After experimenting with all the knobs and dials, I decided the default config was good enough for me except for two factors. The default context window of 1024 tokens is too limiting, I didn’t push it to the max of 32k, but used a moderately high value of 16k. The default batch size of 512 is good for most use cases, but to speed up execution, I decided to extend it to 2048 after running extensive memory evaluations. These two changes made the end resulting system quick as my reading speed while still being detailed enough to be useful. While it still utilises significant memory from my constrained laptop, it still doesn’t cause any failures for my other parallel workflows.

Knowledge Augmentation

OpenWebUI let’s one add documents and collections to reference for RAG tasks. While this is very straight forward to do if it is hosted locally, having a container image can make it slightly more tricky. To navigate around this I created a synced folder in my NAS that triggers an event to copy whatever is pasted there to the docker container. Alternatively one can also create a knowledge base from the WebUI knowledge section.

Final Verdict

OpenSource is a superpower, and I’m starting to appreciate the power of pre-built components. It’s a reminder that sometimes, the best solutions aren’t built from scratch – they’re built around existing tools and a willingness to experiment. This whole endeavor has been a surprisingly good exercise in digital minimalism – a chance to reclaim some time and focus on what truly matters: actually understanding the information I’m working with. Now, if you’ll excuse me, I’ve got a few more PDFs to wrestle with… and maybe a slightly less frantic approach to my coding.